Performance Testing in Software Testing – A Detailed Guide

Software testing is crucial in custom software development: it verifies that applications function correctly and meet user expectations, and it surfaces bugs before they reach users. Performance testing, a subset of software testing and quality assurance, assesses an application’s speed, responsiveness, and stability under various loads. For developers, it confirms that applications handle real-world usage efficiently. For businesses, it reduces the risk of downtime, improves customer satisfaction, and supports growth by showing that applications can scale.

What is Performance Testing?

Performance testing is a type of software testing and quality assurance that evaluates how an application performs under specific conditions. It assesses speed, responsiveness, stability, and scalability, including under high user loads or stress. This testing helps identify bottlenecks, verify the system’s reliability, and confirm that the application can handle increased traffic. Using load-generation and monitoring tools, developers simulate real-world scenarios, measure the application’s behavior, and use the results to improve it.

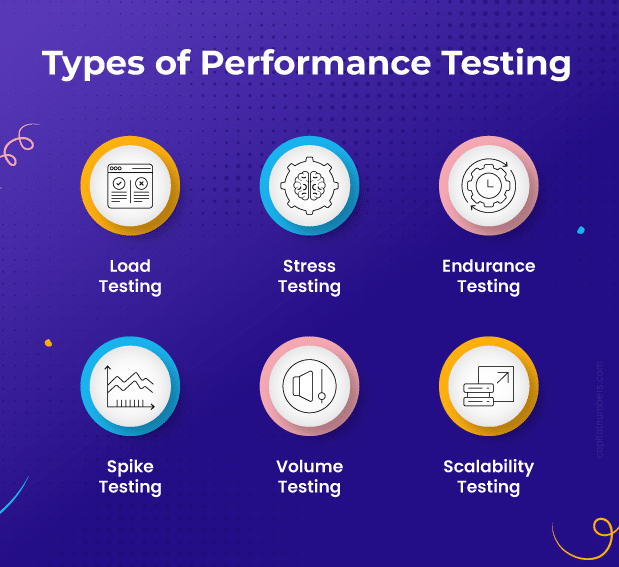

Types of Performance Testing

Performance testing encompasses various types, each designed to assess different aspects of an application’s performance. Here’s a detailed look at the major types of performance testing:

1. Load Testing

- Purpose: To determine how the application behaves under expected user loads.

- Focus: Evaluates the system’s ability to handle a specified load of users, transactions, or data volume.

2. Stress Testing

- Purpose: To find the breaking point of the application by testing it beyond normal operational capacity.

- Focus: Identifies the maximum load the system can handle before it fails or degrades significantly.

3. Endurance Testing (Soak Testing)

- Purpose: To check the system’s performance over an extended period.

- Focus: Ensures the application can handle prolonged load without memory leaks or performance degradation.

4. Spike Testing

- Purpose: To assess the system’s response to sudden and extreme increases in load.

- Focus: Determines the application’s robustness and recovery ability from unexpected spikes.

5. Volume Testing

- Purpose: To evaluate the system’s performance with a large volume of data.

- Focus: Ensures the application can handle a high amount of data and database transactions efficiently.

6. Scalability Testing

- Purpose: To measure the application’s ability to scale up or down in response to load changes.

- Focus: Ensures the system can grow with increasing user demand or shrink during off-peak times without performance issues.

Each type of performance testing plays a crucial role in ensuring that an application can meet user expectations and perform reliably under various conditions. By systematically applying these tests, developers can identify and resolve performance issues, leading to robust and scalable software solutions.
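
One way to see how these test types differ is the shape of the load over time. The sketch below is illustrative only: the function names, user counts, and durations are assumptions chosen for the example, not values taken from any particular tool.

```python
def load_profile(t, users=100):
    """Load test: hold the expected number of users for the whole run."""
    return users


def stress_profile(t, start=100, step=50, step_seconds=60):
    """Stress test: keep adding users in steps until the system degrades."""
    return start + step * (t // step_seconds)


def spike_profile(t, base=100, peak=1000, spike_start=120, spike_seconds=30):
    """Spike test: jump to a peak for a short window, then drop back."""
    in_spike = spike_start <= t < spike_start + spike_seconds
    return peak if in_spike else base


def soak_profile(t, users=80):
    """Endurance (soak) test: a moderate, constant load held for a long time."""
    return users


# Simulated users at a few points in time (t is seconds since test start).
for t in (0, 60, 120, 150, 300):
    print(t, load_profile(t), stress_profile(t), spike_profile(t), soak_profile(t))
```

Load and soak profiles look the same at any instant; what separates them is the duration of the run, which is hours or days for a soak test.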

Use Cases for Different Types of Performance Testing

| Type of Performance Testing | Use Cases |
|---|---|
| Load Testing | |
| Stress Testing | |
| Endurance Testing (Soak Testing) | |
| Spike Testing | |
| Volume Testing | |
| Scalability Testing | |

This table provides a clear and concise view of where each type of performance testing can be effectively applied.

Tools for Different Types of Performance Testing

1. Load Testing

- Apache JMeter

- LoadRunner

- NeoLoad

2. Stress Testing

- Gatling

- LoadUI

- k6

3. Endurance Testing (Soak Testing)

- LoadRunner

- Silk Performer

- Apache JMeter

4. Spike Testing

- BlazeMeter

- LoadNinja

- Locust

5. Volume Testing

- Data Factory

- HammerDB

- Iometer

6. Scalability Testing

- Apache JMeter

- LoadRunner

- BlazeMeter

What Are the Steps to Conduct Performance Testing?

Conducting performance testing involves several key steps to ensure comprehensive evaluation and accurate results. Here are the steps to follow:

1. Identify Performance Testing Goals

- Determine the objectives of the performance tests (e.g., load capacity, response time, stability).

- Define success criteria based on business requirements and user expectations.

2. Define the Test Environment

- Set up a test environment that closely mimics the production environment.

- Keep hardware, software, network configurations, and other resources as close to production as practical, and note any differences so results can be interpreted accordingly.

3. Select Performance Testing Tools

- Choose appropriate tools based on the type of performance testing and specific requirements.

- Popular tools include Apache JMeter, LoadRunner, Gatling, BlazeMeter, and Locust.

4. Plan and Design Test Scenarios

- Identify key scenarios that represent typical and peak user interactions with the application.

- Create detailed test scripts for each scenario, including user actions, data inputs, and expected outcomes.

5. Prepare Test Data

- Generate or acquire realistic test data to simulate actual usage conditions.

- Ensure data variety and volume match real-world scenarios.
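
As a minimal sketch of this step, synthetic records can be generated with a fixed random seed so that every test run sees the same data. The field names (`username`, `email`, `cart_items`) and the `make_users` helper are hypothetical and would be replaced by whatever the application under test actually needs.

```python
import random
import string


def make_users(count, seed=42):
    """Generate `count` synthetic user records; a fixed seed keeps runs repeatable."""
    rng = random.Random(seed)
    users = []
    for i in range(count):
        name = "".join(rng.choices(string.ascii_lowercase, k=8))
        users.append({
            "id": i,
            "username": name,
            "email": f"{name}@example.test",  # reserved test domain, no real addresses
            "cart_items": rng.randint(0, 20),
        })
    return users


sample = make_users(3)
print(sample[0])
```

Generating data this way, rather than copying production records, also avoids putting real personal data into the test environment.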

6. Configure the Test Environment

- Deploy the application in the test environment.

- Configure monitoring tools to track system performance, resource utilization, and other metrics during tests.

7. Execute the Tests

- Run the tests according to the predefined scenarios and schedule.

- Start with baseline tests to establish a performance benchmark.

- Gradually increase the load to identify how the system behaves under different conditions.
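
The baseline-then-ramp approach can be sketched with the Python standard library alone. This is not how JMeter, Locust, or any other tool works internally; `fake_request` is a hypothetical stand-in for a real call to the system under test, and the step sizes are arbitrary.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request():
    """Stand-in for a real request (hypothetical); returns elapsed seconds."""
    start = time.perf_counter()
    time.sleep(0.01)  # pretend the server took about 10 ms
    return time.perf_counter() - start


def run_step(request_fn, users, requests_per_user):
    """Run `users` concurrent workers, each issuing `requests_per_user` calls."""
    def worker():
        return [request_fn() for _ in range(requests_per_user)]

    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(worker) for _ in range(users)]
        return [t for f in futures for t in f.result()]


def ramp(request_fn, steps=(1, 5, 10), requests_per_user=3):
    """Baseline at the first step, then raise the load step by step."""
    results = {}
    for users in steps:
        timings = run_step(request_fn, users, requests_per_user)
        results[users] = sum(timings) / len(timings)  # mean latency at this step
    return results


for users, mean_latency in ramp(fake_request).items():
    print(f"{users:>3} users: mean latency {mean_latency * 1000:.1f} ms")
```

Comparing each step against the first (baseline) step shows at what load response times start to climb.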

8. Monitor and Record Results

- Use monitoring tools to capture performance metrics such as response times, throughput, CPU and memory usage, and error rates.

- Record test results for analysis.

9. Analyze Test Results

- Compare the results against the defined success criteria.

- Identify any performance bottlenecks, limitations, or areas of concern.

- Analyze trends and patterns to understand performance behavior.
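
Comparing results against success criteria can be as simple as a threshold check. The sketch below assumes every metric is "lower is better" (so throughput would need the opposite comparison), and the metric names and limits are invented for illustration.

```python
def evaluate(results, criteria):
    """Return a list of failures; an empty list means the run met every limit."""
    failures = []
    for metric, limit in criteria.items():
        value = results[metric]
        if value > limit:
            failures.append(f"{metric}: {value} exceeds limit {limit}")
    return failures


# Hypothetical success criteria and measured results.
criteria = {"p95_response_ms": 500, "error_rate_pct": 1.0}
results = {"p95_response_ms": 620, "error_rate_pct": 0.4}

print(evaluate(results, criteria))
# prints ['p95_response_ms: 620 exceeds limit 500']
```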

10. Identify and Implement Improvements

- Based on the analysis, pinpoint the root causes of performance issues.

- Implement necessary optimizations, such as code improvements, database tuning, or infrastructure upgrades.

11. Retest to Validate Improvements

- Rerun the performance tests to verify that the implemented changes have resolved the identified issues.

- Ensure that the application meets the performance goals and criteria.
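
To judge whether a change actually helped, the retest can be compared with the earlier run metric by metric. This is a minimal sketch; the metric names and numbers are made up, and a real comparison should also account for run-to-run noise.

```python
def percent_change(before, after):
    """Relative change from `before` to `after`, in percent (negative means lower)."""
    return (after - before) / before * 100.0


def compare_runs(baseline, retest):
    """Map each metric present in both runs to its percent change."""
    return {
        metric: round(percent_change(baseline[metric], retest[metric]), 1)
        for metric in baseline
        if metric in retest and baseline[metric] != 0
    }


# Hypothetical numbers from a run before and after an optimization.
baseline = {"mean_response_ms": 400.0, "throughput_rps": 200.0}
retest = {"mean_response_ms": 300.0, "throughput_rps": 250.0}

print(compare_runs(baseline, retest))
# prints {'mean_response_ms': -25.0, 'throughput_rps': 25.0}
```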

12. Report Findings

- Document the test process, results, and any performance issues identified.

- Provide recommendations for further improvements or maintenance.

- Share the report with stakeholders for review and decision-making.

13. Continuous Performance Monitoring

- Establish a plan for ongoing performance monitoring in the production environment.

- Regularly review and update performance tests to accommodate changes in the application or user behavior.

By following these steps, software developers can effectively conduct performance testing to ensure that the application meets performance requirements and delivers a reliable, efficient user experience.

Key Metrics in Performance Testing

1. Response Time

- Definition: Response time refers to the total time taken for a system to respond to a user’s request. It is a critical metric as it directly impacts user satisfaction.

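- Measurement: Response time is measured from the moment a user sends a request until the complete response is received. The time until only the first byte arrives is a related but narrower metric, usually called time to first byte (TTFB).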
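
Response times are usually summarized with percentiles rather than a single average, because a few slow requests can distort the mean. The sketch below uses the nearest-rank method, which is one common definition among several; the sample timings are invented.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Ten hypothetical response times in milliseconds, one of them an outlier.
timings_ms = [120, 95, 110, 105, 2300, 98, 101, 115, 99, 130]

print("mean:", sum(timings_ms) / len(timings_ms))
print("p50:", percentile(timings_ms, 50))  # 105
print("p90:", percentile(timings_ms, 90))  # 130
print("p95:", percentile(timings_ms, 95))  # 2300
```

Here the median stays near 100 ms while the single slow request pulls the mean above 300 ms and shows up directly in the 95th percentile.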

2. Throughput

- Definition: Throughput is the number of transactions a system can process within a given time frame. It indicates the capacity of the system to handle user requests.

- Measurement: Throughput is typically measured in transactions per second (TPS) or requests per second (RPS). Performance testing tools can help measure throughput by simulating concurrent users and recording the number of successful transactions over time.
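
As a small worked example, throughput is the count of successful transactions divided by the length of the measurement window. The helper names and the timestamps below are assumptions for illustration.

```python
def throughput(completed, duration_seconds):
    """Successful transactions per second over the measurement window."""
    if duration_seconds <= 0:
        raise ValueError("duration must be positive")
    return completed / duration_seconds


def throughput_per_window(timestamps, window_seconds=1.0):
    """Count completions per fixed window to show how throughput varies over time."""
    counts = {}
    for ts in timestamps:
        bucket = int(ts // window_seconds)
        counts[bucket] = counts.get(bucket, 0) + 1
    return counts


print(throughput(1200, 60))  # 1200 transactions in 60 s -> 20.0 TPS
print(throughput_per_window([0.1, 0.5, 1.2, 2.9, 2.95]))
```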

3. CPU and Memory Utilization

- Definition: CPU utilization refers to the percentage of the CPU’s capacity being used, while memory utilization refers to the amount of RAM being used by the application. High utilization rates can indicate potential bottlenecks.

- Measurement: These metrics are measured using various monitoring tools. They track the percentage of CPU and memory resources consumed during different stages of the performance test.
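
Dedicated monitoring tools observe the whole server, but the idea can be illustrated on a single Python process using only the standard library. This sketch measures the current process, not a remote system; `measure` and `busy_work` are hypothetical names.

```python
import time
import tracemalloc


def measure(fn):
    """Run `fn` and report this process's CPU share and peak traced memory."""
    tracemalloc.start()
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    fn()
    cpu = time.process_time() - cpu_start
    wall = time.perf_counter() - wall_start
    _, peak = tracemalloc.get_traced_memory()  # (current, peak) in bytes
    tracemalloc.stop()
    return {
        "cpu_pct": 100.0 * cpu / wall if wall > 0 else 0.0,
        "peak_bytes": peak,
    }


def busy_work():
    """CPU-bound placeholder workload."""
    return sum(i * i for i in range(200_000))


print(measure(busy_work))
```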

4. Concurrency and User Load

- Definition: Concurrency refers to the number of simultaneous users or processes that the system can handle, while user load is the number of users accessing the system at any given time.

- Measurement: Concurrency and user load are measured by simulating multiple users accessing the system simultaneously. Tools like LoadRunner, JMeter, and Locust allow testers to define the number of concurrent users and analyze how the system performs as the load increases.
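
When choosing how many concurrent users to simulate, Little's Law gives a useful estimate: the average number of users in the system equals the request rate multiplied by the time each user spends per request cycle. The sketch below applies it with think time included; the target numbers are hypothetical.

```python
def required_concurrency(target_rps, response_seconds, think_seconds=0.0):
    """Little's Law: users = request rate x (response time + think time)."""
    return target_rps * (response_seconds + think_seconds)


# To sustain 200 requests/s when each user waits 0.25 s for a response
# and then pauses 4.75 s before the next request:
print(required_concurrency(200, 0.25, 4.75))  # 1000.0 simulated users
```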

5. Error Rate

- Definition: Error rate is the percentage of requests that fail or return errors during a performance test. A high error rate can indicate problems with the application’s stability and reliability.

- Measurement: Error rate is measured by counting the number of failed requests or transactions during the test and dividing it by the total number of requests or transactions. Performance testing tools provide detailed reports on the types and frequency of errors encountered during the test.
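
The calculation can be sketched as follows. Treating every HTTP status of 400 or above as a failure is an assumption made for this example; some teams count only 5xx responses or also count timeouts.

```python
from collections import Counter


def error_rate(status_codes):
    """Percentage of responses with an HTTP status of 400 or above."""
    if not status_codes:
        return 0.0
    failed = sum(1 for code in status_codes if code >= 400)
    return failed * 100.0 / len(status_codes)


def error_breakdown(status_codes):
    """Count failures by status code to show which errors dominate."""
    return Counter(code for code in status_codes if code >= 400)


# Hypothetical run: 95 successes, three 500s, and two 503s.
codes = [200] * 95 + [500] * 3 + [503] * 2

print(error_rate(codes))  # 5.0
print(error_breakdown(codes))
```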

Challenges in Performance Testing

1. Environment Configuration Issues

Matching the test environment to the production environment is essential but challenging. Discrepancies in hardware, network configurations, or software versions can lead to inaccurate results, making this setup resource-intensive and costly.

2. Accurate Simulation of Real-world Scenarios

Simulating real-world user behavior accurately requires detailed understanding and time-consuming script creation. Inaccurate simulations can produce misleading results, giving a false sense of security about the application’s performance.

3. Data Management

Handling large volumes of realistic test data is complex, especially with data privacy regulations like GDPR or CCPA. Ensuring data freshness and compliance is crucial to avoid skewed test results and overlooked issues.

4. Continuous Integration and Continuous Delivery (CI/CD) Integration

Incorporating performance testing into CI/CD pipelines is challenging due to the time-consuming nature of these tests. Balancing comprehensive testing with delivery speed requires careful planning to avoid bottlenecks in the development process.

5. Analyzing and Interpreting Results

Performance tests generate vast amounts of data, making analysis complex. Understanding the interplay between metrics like response time and throughput is crucial. Misinterpreting results can lead to incorrect conclusions, potentially overlooking critical issues.

Addressing these challenges is key to ensuring effective performance testing and robust, reliable software.

Future Trends in Performance Testing

1. AI and Machine Learning in Performance Testing

AI and machine learning are automating and optimizing performance testing by analyzing historical data, predicting bottlenecks, and adapting simulations. These technologies enable continuous testing and proactive performance management, reducing the time and effort needed to identify and resolve issues.

2. Performance Testing for Microservices and Containers

Performance testing must adapt to the dynamic, scalable nature of microservices and containers. This involves testing individual services as well as their interactions, using tools that simulate real-world scenarios on container orchestration platforms like Kubernetes, so that performance problems are found before they reach production.

3. Integrating Performance Testing in DevOps

Integrating performance testing into the DevOps pipeline ensures continuous delivery and deployment. Automated performance tests within CI/CD workflows provide immediate feedback, allowing early detection and resolution of performance issues, promoting continuous improvement and maintaining software quality.

4. Cloud-native Performance Testing

Cloud-native performance testing simulates cloud-specific conditions such as auto-scaling and multi-region deployments. Utilizing cloud-based testing services leverages the cloud’s scalability and flexibility, enabling extensive performance tests and ensuring applications are optimized for cloud environments.

These trends reflect the need for more sophisticated and adaptive performance testing methods, essential for maintaining high performance and reliability in complex and dynamic environments.

Conclusion

Performance testing is essential for ensuring that applications meet user expectations for speed, responsiveness, and stability. By understanding and addressing the challenges and leveraging future trends like AI, microservices, DevOps integration, and cloud-native testing, organizations can deliver robust, high-performing software. Effective performance testing not only enhances user satisfaction but also supports business growth and scalability.

Stay ahead of the curve by incorporating advanced performance testing strategies into your development process. Contact our expert team today to learn how we can help you optimize your application’s performance and ensure a seamless user experience.
